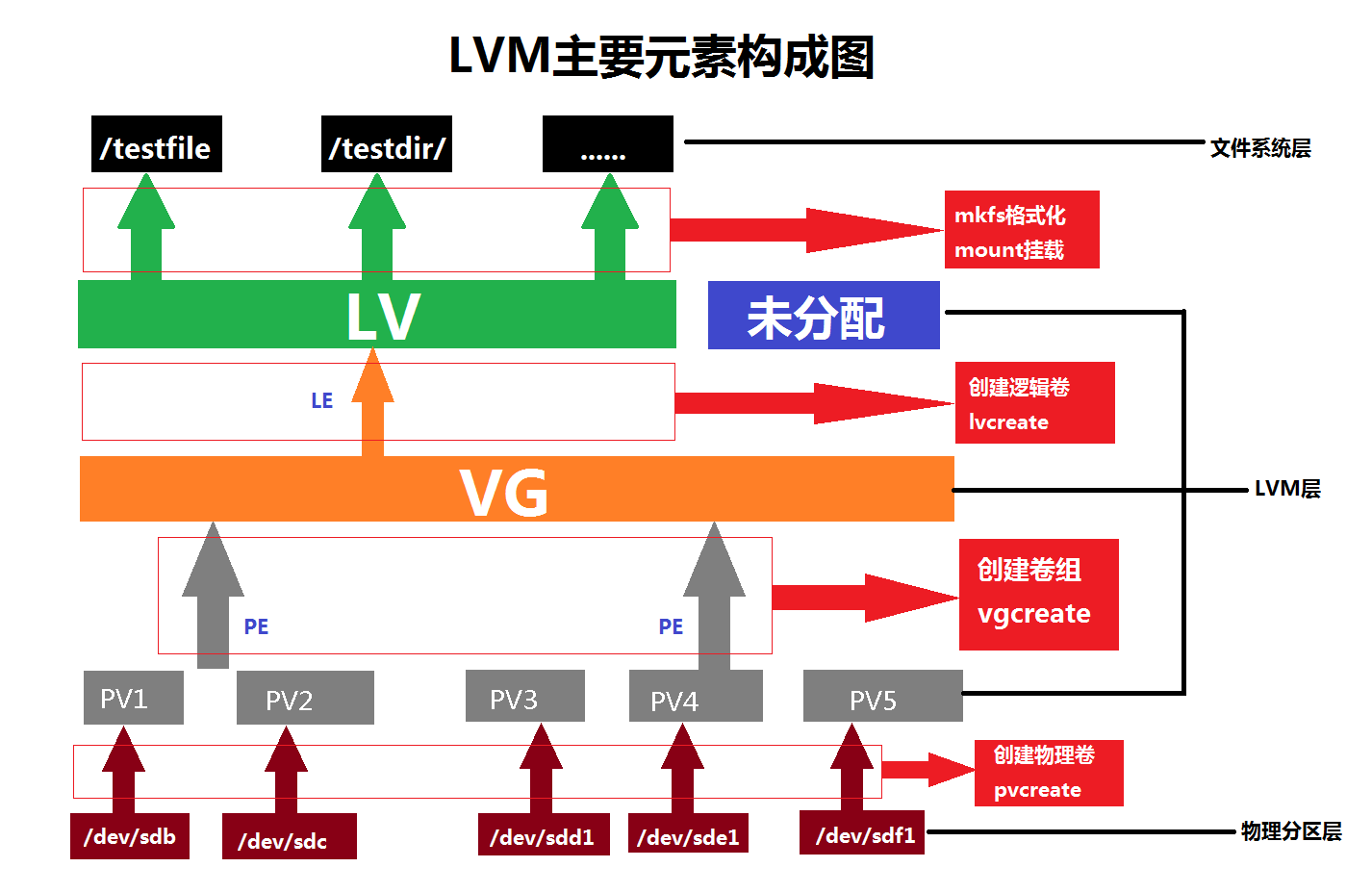

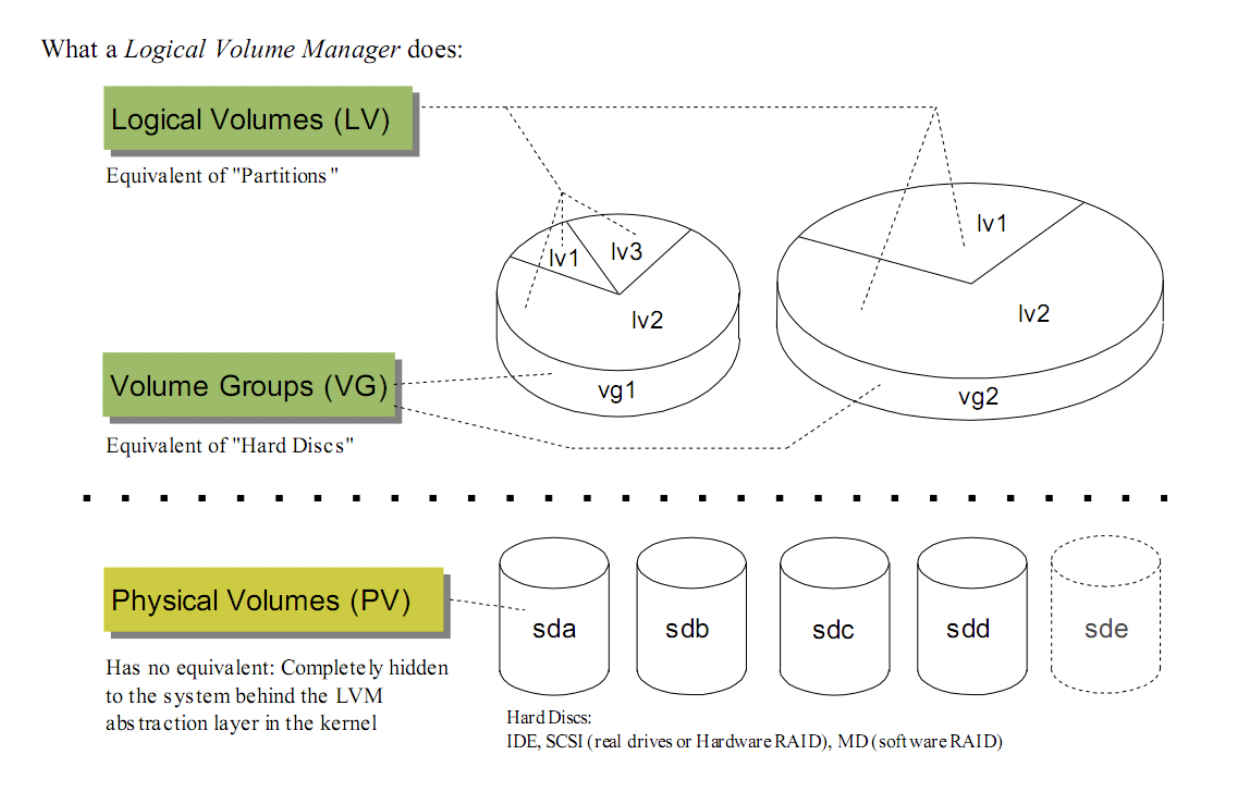

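Using LVM on Linux

LVM stands for Logical Volume Manager. It solves the problem of resizing disk partitions dynamically. LVM is not software RAID (Redundant Array of Independent Disks).

The steps from a raw disk to a usable filesystem on an LV:

Disk -> partition (fdisk) -> PV (pvcreate) -> VG (vgcreate) -> LV (lvcreate) -> format (mkfs.ext4 puts an ext4 filesystem on the LV) -> mount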
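The chain above can be sketched as a dry run that only prints the command for each stage. The device, volume group, logical volume, and mount point names are hypothetical placeholders, and the sketch assumes a SATA-style disk whose first partition carries a `1` suffix.

```shell
#!/usr/bin/env bash
# Dry run: print the command for each stage instead of executing it.
# /dev/sdb, vg_demo, lv_demo and /mnt/demo are hypothetical names.
lvm_pipeline() {
  local disk=$1 vg=$2 lv=$3 mnt=$4
  local part="${disk}1"                        # first partition (NVMe disks use a p1 suffix)
  echo "fdisk ${disk}"                         # disk -> partition (interactive)
  echo "pvcreate ${part}"                      # partition -> physical volume
  echo "vgcreate ${vg} ${part}"                # physical volume -> volume group
  echo "lvcreate -l 100%FREE -n ${lv} ${vg}"   # volume group -> logical volume
  echo "mkfs.ext4 /dev/${vg}/${lv}"            # ext4 filesystem on the LV
  echo "mount /dev/${vg}/${lv} ${mnt}"         # mount it
}

lvm_pipeline /dev/sdb vg_demo lv_demo /mnt/demo
```

Printing rather than executing keeps the sketch safe to try on any machine; the printed lines can be reviewed and then run by hand as root.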

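How LVM manages disks

lvreduce: shrinking an LV

Unmount first -> shrink the logical boundary (the filesystem) -> shrink the physical boundary (the LV) -> check the filesystem again. ==Use with caution.==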
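A minimal dry-run sketch of that shrink order for an ext4 volume follows. The device path, mount point, and target size are hypothetical, and the sketch only prints the commands. It assumes the filesystem is shrunk to the same size the LV is then reduced to.

```shell
#!/usr/bin/env bash
# Dry run: print the shrink sequence; nothing is executed.
# The device, mount point and size passed below are hypothetical.
shrink_plan() {
  local dev=$1 mnt=$2 size=$3
  echo "umount ${mnt}"
  echo "e2fsck -f ${dev}"            # resize2fs asks for a forced check before shrinking
  echo "resize2fs ${dev} ${size}"    # logical boundary: shrink the filesystem first
  echo "lvreduce -L ${size} ${dev}"  # physical boundary: then shrink the LV
  echo "e2fsck -f ${dev}"            # check again before remounting
  echo "mount ${dev} ${mnt}"
}

shrink_plan /dev/vg1/u03 /lvm 4096G
```

Reducing the LV below the filesystem size destroys data, which is why the filesystem step comes first. lvreduce also has a `-r` (`--resizefs`) option that performs the filesystem resize itself; whether to rely on it is a matter of preference.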

    [aliyun@uos15 15:07 /dev/disk/by-label]
    $sudo e2label /dev/nvme0n1p1 polaru01    # set the filesystem label
    [aliyun@uos15 15:07 /dev/disk/by-label]
    $lsblk -f
    NAME        FSTYPE LABEL     UUID                                 FSAVAIL FSUSE% MOUNTPOINT
    sda
    ├─sda1      vfat   EFI       D0E3-79A8                               299M     0% /boot/efi
    ├─sda2      ext4   Boot      f204c992-fb20-40e1-bf58-b11c994ee698    1.3G     6% /boot
    ├─sda3      ext4   Roota     dbc68010-8c36-40bf-b794-271e59ff5727   14.8G    61% /
    ├─sda4      ext4   Rootb     73fe0ac6-ff6b-46cc-a609-c574be026e8f
    ├─sda5      ext4   _dde_data 798fce56-fc82-4f59-bcaa-d2ed5c48da8d   42.1G    54% /data
    ├─sda6      ext4   Backup    267dc7a8-1659-4ccc-b7dc-5f2cd80f4e4e    3.7G    57% /recovery
    └─sda7      swap   SWAP      7a5632dc-bc7b-410e-9a50-07140f20cd13                [SWAP]
    nvme0n1
    └─nvme0n1p1 ext4   polaru01  762a5700-8cf1-454a-b385-536b9f63c25d  413.4G    54% /u01
    nvme1n1     xfs    u02       8ddf19c4-fe71-4428-b2aa-e45acf08050c
    nvme2n1     xfs    u03       2b8625b4-c67d-4f1e-bed6-88814adfd6cc
    nvme3n1     ext4   u01       cda85750-c4f7-402e-a874-79cb5244d4e1

Creating and extending LVM

    sudo vgcreate vg1 /dev/nvme0n1 /dev/nvme1n1    # create vg1 on two physical disks
    # If this fails with:
    #   Can't open /dev/nvme1n1 exclusively. Mounted filesystem?
    #   Can't open /dev/nvme0n1 exclusively. Mounted filesystem?
    # then /dev/nvme0n1 is already mounted and must be unmounted first
    vgdisplay
    sudo lvcreate -L 5T -n u03 vg1    # create a 5T logical volume in volume group vg1
    # or: sudo lvcreate -l 100%free -n u03 vg1
    sudo mkfs.ext4 /dev/vg1/u03
    sudo mkdir /lvm
    sudo fdisk -l
    sudo umount /lvm
    sudo lvresize -L 5.8T /dev/vg1/u03    # extend the LV; the filesystem does not see the new size yet. Shrinking is riskier and requires umount first
    sudo e2fsck -f /dev/vg1/u03
    sudo resize2fs /dev/vg1/u03    # make the new size take effect
    sudo mount /dev/vg1/u03 /lvm
    cd /lvm/
    lvdisplay
    sudo vgdisplay vg1
    lsblk -l
    lsblk
    sudo vgextend vg1 /dev/nvme3n1    # extend the VG: add one more disk to vg1
    ls /u01
    sudo vgdisplay
    sudo fdisk -l
    sudo pvdisplay
    sudo lvcreate -L 1T -n lv2 vg1    # allocate another 1T volume from vg1
    sudo lvdisplay
    sudo mkfs.ext4 /dev/vg1/lv2
    mkdir /lv2
    ls /
    sudo mkdir /lv2
    sudo mount /dev/vg1/lv2 /lv2
    df -lh

    # creating the LVM volume by hand (shell history)
    1281 18/05/22 11:04:22 ls -l /dev/|grep -v ^l|awk '{print $NF}'|grep -E "^nvme[7-9]{1,2}n1$|^df[a-z]$|^os[a-z]$"
    1282 18/05/22 11:05:06 vgcreate -s 32 vgbig /dev/nvme7n1 /dev/nvme8n1 /dev/nvme9n1
    1283 18/05/22 11:05:50 vgcreate -s 32 vgbig /dev/nvme7n1 /dev/nvme8n1 /dev/nvme9n1
    1287 18/05/22 11:07:59 lvcreate -A y -I 128K -l 100%FREE -i 3 -n big vgbig
    1288 18/05/22 11:08:02 df -h
    1289 18/05/22 11:08:21 lvdisplay
    1290 18/05/22 11:08:34 df -lh
    1291 18/05/22 11:08:42 df -h
    1292 18/05/22 11:09:05 mkfs.ext4 /dev/vgbig/big -m 0 -O extent,uninit_bg -E lazy_itable_init=1 -q -L big -J size=4000
    1298 18/05/22 11:10:28 mkdir -p /big
    1301 18/05/22 11:12:11 mount /dev/vgbig/big /big

Creating an LVM volume with a script

    function create_polarx_lvm_V62(){
        vgremove vgpolarx

        #sed -i "97 a\ types = ['nvme', 252]" /etc/lvm/lvm.conf
        parted -s /dev/nvme0n1 rm 1
        parted -s /dev/nvme1n1 rm 1
        parted -s /dev/nvme2n1 rm 1
        parted -s /dev/nvme3n1 rm 1
        dd if=/dev/zero of=/dev/nvme0n1 count=10000 bs=512
        dd if=/dev/zero of=/dev/nvme1n1 count=10000 bs=512
        dd if=/dev/zero of=/dev/nvme2n1 count=10000 bs=512
        dd if=/dev/zero of=/dev/nvme3n1 count=10000 bs=512

        #lvmdiskscan
        vgcreate -s 32 vgpolarx /dev/nvme0n1 /dev/nvme1n1 /dev/nvme2n1 /dev/nvme3n1
        lvcreate -A y -I 16K -l 100%FREE -i 4 -n polarx vgpolarx
        mkfs.ext4 /dev/vgpolarx/polarx -m 0 -O extent,uninit_bg -E lazy_itable_init=1 -q -L polarx -J size=4000
        sed -i "/polarx/d" /etc/fstab
        mkdir -p /polarx
        echo "LABEL=polarx /polarx ext4 defaults,noatime,data=writeback,nodiratime,nodelalloc,barrier=0 0 0" >> /etc/fstab
        mount -a
    }

    create_polarx_lvm_V62

The -I option sets the stripe size (striping granularity). The default is 64K; the MySQL page size is 16K, so 16K is the better choice.
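To see why the stripe size matters for 16K pages, the sketch below computes which stripe (disk index) holds a given byte offset, using the usual round-robin rule: stripe index = (offset / stripe size) mod stripe count. The function name and the 4-disk layout are assumptions made for the illustration.

```shell
#!/usr/bin/env bash
# Which stripe (disk index) holds the byte at a given offset?
# stripe index = (offset / stripe_size) mod number_of_stripes
stripe_index() {
  local offset=$1 stripe_size=$2 stripes=$3
  echo $(( (offset / stripe_size) % stripes ))
}

# Map four consecutive 16K pages onto a 4-disk striped LV.
for size_kb in 16 64; do
  printf '%sK stripe:' "$size_kb"
  for page in 0 1 2 3; do
    printf ' %s' "$(stripe_index $((page * 16384)) $((size_kb * 1024)) 4)"
  done
  printf '\n'
done
# prints:
# 16K stripe: 0 1 2 3
# 64K stripe: 0 0 0 0
```

With a 16K stripe, four neighbouring pages land on four different disks; with a 64K stripe they all land on the same disk.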

By default lvcreate creates a linear LV, which uses one disk at a time and cannot add up the IOPS of multiple disks:

    #lvcreate -h
      lvcreate - Create a logical volume

      Create a linear LV.
      lvcreate -L|--size Size[m|UNIT] VG
            [ -l|--extents Number[PERCENT] ]
            [ --type linear ]
            [ COMMON_OPTIONS ]
            [ PV ... ]

      Create a striped LV (infers --type striped).
      lvcreate -i|--stripes Number -L|--size Size[m|UNIT] VG
            [ -l|--extents Number[PERCENT] ]
            [ -I|--stripesize Size[k|UNIT] ]
            [ COMMON_OPTIONS ]
            [ PV ... ]

      Create a raid1 or mirror LV (infers --type raid1|mirror).
      lvcreate -m|--mirrors Number -L|--size Size[m|UNIT] VG
            [ -l|--extents Number[PERCENT] ]
            [ -R|--regionsize Size[m|UNIT] ]
            [ --mirrorlog core|disk ]
            [ --minrecoveryrate Size[k|UNIT] ]
            [ --maxrecoveryrate Size[k|UNIT

remount

A filesystem that is in active use cannot be unmounted. To change its mount options, consider the -o remount option of mount.

    [root@ky3 ~]# mount -o lazytime,remount /polarx/    # add the lazytime option
    [root@ky3 ~]# mount -t ext4
    /dev/mapper/vgpolarx-polarx on /polarx type ext4 (rw,noatime,nodiratime,lazytime,nodelalloc,nobarrier,stripe=128,data=writeback)
    [root@ky3 ~]# mount -o rw,remount /polarx/    # drop the lazytime option that was just added
    [root@ky3 ~]# mount -t ext4
    /dev/mapper/vgpolarx-polarx on /polarx type ext4 (rw,noatime,nodiratime,nodelalloc,nobarrier,stripe=128,data=writeback)

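Be very careful with remount: it triggers heavy reclaim of slab caches and similar structures, which can push sys CPU to 100% and hang the whole machine. Run it with caution.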
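One precaution is to look at the size of the dentry cache before a remount. The call trace in the log that follows goes through shrink_dcache_sb and shrink_dentry_list, so the sketch assumes (without having measured it) that a larger dentry cache means a longer stall. It reads the first two fields of /proc/sys/fs/dentry-state; the function name is made up for this example.

```shell
#!/usr/bin/env bash
# Print the total and unused dentry counts from a dentry-state file.
# First two fields of /proc/sys/fs/dentry-state: nr_dentry, nr_unused.
dentry_stats() {
  local state_file=${1:-/proc/sys/fs/dentry-state}
  awk '{ print "nr_dentry=" $1, "nr_unused=" $2 }' "$state_file"
}

# Only meaningful on Linux, where the proc file exists.
if [ -r /proc/sys/fs/dentry-state ]; then
  dentry_stats
fi
```

What counts as "too many" depends on the machine; the numbers are a hint for choosing a quiet time window, not a guarantee that the remount is safe.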

    [2023-10-26 15:04:49][kernel][info]EXT4-fs (dm-0): re-mounted. Opts: lazytime,data=writeback,nodelalloc,barrier=0,nolazytime
    [2023-10-26 15:04:49][kernel][info]EXT4-fs (dm-1): re-mounted. Opts: lazytime,data=writeback,nodelalloc,barrier=0,nolazytime
    [2023-10-26 15:05:16][kernel][warning]Modules linked in: ip_tables tcp_diag inet_diag venice_reduce_print(OE) bianque_driver(OE) 8021q garp mrp bridge stp llc ip6_tables tcp_rds_rt_j(OE) tcp_rt_base(OE) slb_vctk(OE) slb_vtoa(OE) hookers slb_ctk_proxy(OE) slb_ctk_session(OE) slb_ctk_debugfs(OE) loop nf_conntrack fuse btrfs zlib_deflate raid6_pq xor vfat msdos fat xfs libcrc32c ext3 jbd dm_mod khotfix_D902467(OE) kpatch_D537536(OE) kpatch_D793896(OE) kpatch_D608634(OE) kpatch_D629788(OE) kpatch_D820113(OE) kpatch_D723518(OE) kpatch_D616841(OE) kpatch_D602147(OE) kpatch_D523456(OE) kpatch_D559221(OE) ipflt(OE) kpatch_D656712(OE) kpatch_D753272(OE) kpatch_D813404(OE) i40e kpatch_D543129(OE) kpatch_D645707(OE) kpatch(OE) rpcrdma(OE) xprtrdma(OE) ib_isert(OE) ib_iser(OE) ib_srpt(OE) ib_srp(OE) ib_ipoib(OE) ib_addr(OE) ib_sa(OE)
    [2023-10-26 15:05:16][kernel][warning]ib_mad(OE) bonding iTCO_wdt iTCO_vendor_support intel_powerclamp coretemp intel_rapl kvm_intel kvm crc32_pclmul ghash_clmulni_intel aesni_intel lrw gf128mul glue_helper ablk_helper cryptd ipmi_devintf pcspkr sg lpc_ich mfd_core i2c_i801 shpchp wmi ipmi_si ipmi_msghandler acpi_pad acpi_power_meter binfmt_misc aocblk(OE) mlx5_ib(OE) ext4 mbcache jbd2 crc32c_intel ast(OE) syscopyarea mlx5_core(OE) sysfillrect sysimgblt ptp i2c_algo_bit pps_core drm_kms_helper aocnvm(OE) vxlan ttm aocmgr(OE) ip6_udp_tunnel udp_tunnel drm i2c_core sd_mod crc_t10dif crct10dif_generic crct10dif_pclmul crct10dif_common ahci libahci libata rdma_ucm(OE) rdma_cm(OE) iw_cm(OE) ib_umad(OE) ib_ucm(OE) ib_uverbs(OE) ib_cm(OE) ib_core(OE) mlx_compat(OE) [last unloaded: ip_tables]
    [2023-10-26 15:05:16][kernel][warning]CPU: 85 PID: 105195 Comm: mount Tainted: G W OE K------------ 3.10.0-327.ali2017.alios7.x86_64 #1
    [2023-10-26 15:05:16][kernel][warning]Hardware name: Foxconn AliServer-Thor-04-12U-v2/Thunder2.0 2U, BIOS 1.0.PL.FC.P.026.05 03/04/2020
    [2023-10-26 15:05:16][kernel][warning]task: ffff8898016c5b70 ti: ffff88b2b5094000 task.ti: ffff88b2b5094000
    [2023-10-26 15:05:16][kernel][warning]RIP: 0010:[<ffffffff81656502>] [<ffffffff81656502>] _raw_spin_lock+0x12/0x50
    [2023-10-26 15:05:16][kernel][warning]RSP: 0018:ffff88b2b5097d98 EFLAGS: 00000202
    [2023-10-26 15:05:16][kernel][warning]RAX: 0000000000160016 RBX: ffffffff81657696 RCX: 007d44c33c3e3c3e
    [2023-10-26 15:05:16][kernel][warning]RDX: 007d44c23c3e3c3e RSI: 00000000007d44c3 RDI: ffff88b0247b67d8
    [2023-10-26 15:05:16][kernel][warning]RBP: ffff88b2b5097d98 R08: 0000000000000000 R09: 0000000000000007
    [2023-10-26 15:05:16][kernel][warning]R10: ffff88b0247a7bc0 R11: 0000000000000000 R12: ffffffff81657696
    [2023-10-26 15:05:16][kernel][warning]R13: ffff88b2b5097d80 R14: ffffffff81657696 R15: ffff88b2b5097d78
    [2023-10-26 15:05:16][kernel][warning]FS: 00007ff7d3f4f880(0000) GS:ffff88bd6a340000(0000) knlGS:0000000000000000
    [2023-10-26 15:05:16][kernel][warning]CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033
    [2023-10-26 15:05:16][kernel][warning]CR2: 00007fff5286b000 CR3: 0000008177750000 CR4: 00000000003607e0
    [2023-10-26 15:05:16][kernel][warning]DR0: 0000000000000000 DR1: 0000000000000000 DR2: 0000000000000000
    [2023-10-26 15:05:16][kernel][warning]DR3: 0000000000000000 DR6: 00000000fffe0ff0 DR7: 0000000000000400
    [2023-10-26 15:05:16][kernel][warning]Call Trace:
    [2023-10-26 15:05:16][kernel][warning][<ffffffff812085df>] shrink_dentry_list+0x4f/0x480
    [2023-10-26 15:05:16][kernel][warning][<ffffffff81208a9c>] shrink_dcache_sb+0x8c/0xd0
    [2023-10-26 15:05:16][kernel][warning][<ffffffff811f3a7c>] do_remount_sb+0x4c/0x1a0
    [2023-10-26 15:05:16][kernel][warning][<ffffffff81212519>] do_mount+0x6a9/0xa40
    [2023-10-26 15:05:16][kernel][warning][<ffffffff8117830e>] ? __get_free_pages+0xe/0x50
    [2023-10-26 15:05:16][kernel][warning][<ffffffff81212946>] SyS_mount+0x96/0xf0
    [2023-10-26 15:05:16][kernel][warning][<ffffffff816600fd>] system_call_fastpath+0x16/0x1b
    [2023-10-26 15:05:16][kernel][warning]Code: f6 47 02 01 74 e5 0f 1f 00 e8 a6 17 ff ff eb db 66 0f 1f 84 00 00 00 00 00 0f 1f 44 00 00 55 48 89 e5 b8 00 00 02 00 f0 0f c1 07 <89> c2 c1 ea 10 66 39 c2 75 02 5d c3 83 e2 fe 0f b7 f2 b8 00 80
    [2023-10-26 15:05:44][kernel][emerg]BUG: soft lockup - CPU#85 stuck for 23s! [mount:105195]

Creating LVM: the fuller script

    function disk_part(){
        set -e
        if [ $# -le 1 ]
        then
            echo "disk_part argument error"
            exit -1
        fi
        action=$1
        disk_device_list=(`echo $*`)

        echo $disk_device_list
        unset disk_device_list[0]

        echo $action
        echo ${disk_device_list[*]}
        len=`echo ${#disk_device_list[@]}`
        echo "start remove origin partition "
        for dev in ${disk_device_list[@]}
        do
            #echo ${dev}
            parted -s ${dev} rm 1 || true
            dd if=/dev/zero of=${dev} count=100000 bs=512
        done
        # Replace line 98 of lvm.conf (sed "c"; check the line number against your lvm.conf first).
        # To insert after line 98 instead, change c to a.
        sed -i "98 c\ types = ['aliflash' , 252 , 'nvme' ,252 , 'venice', 252 , 'aocblk', 252]" /etc/lvm/lvm.conf
        sed -i "/flash/d" /etc/fstab

        if [ x${1} == x"split" ]
        then
            echo "split disk "
            #lvmdiskscan
            echo ${disk_device_list}
            #vgcreate -s 32 vgpolarx /dev/nvme0n1 /dev/nvme2n1
            vgcreate -s 32 vgpolarx ${disk_device_list[*]}
            # stripe size 16K, to match the MySQL page size
            #lvcreate -A y -I 16K -l 100%FREE -i 2 -n polarx vgpolarx
            lvcreate -A y -I 16K -l 100%FREE -i ${#disk_device_list[@]} -n polarx vgpolarx
            #lvcreate -A y -I 128K -l 75%VG -i ${len} -n volume1 vgpolarx
            #lvcreate -A y -I 128K -l 100%FREE -i ${len} -n volume2 vgpolarx
            mkfs.ext4 /dev/vgpolarx/polarx -m 0 -O extent,uninit_bg -E lazy_itable_init=1 -q -L polarx -J size=4000
            sed -i "/polarx/d" /etc/fstab
            mkdir -p /polarx
            opt="defaults,noatime,data=writeback,nodiratime,nodelalloc,barrier=0"
            echo "LABEL=polarx /polarx ext4 ${opt} 0 0" >> /etc/fstab
            mount -a
        else
            echo "unknown action "
        fi
    }

    function format_nvme_mysql(){
        # $1 is the expected number of mounted flash partitions
        if [ `df |grep flash|wc -l` -eq $1 ]
        then
            echo "check success"
            echo "start umount partition "
            partition_list=`df |grep flash|awk -F ' ' '{print $1}'`
            for partition in ${partition_list[@]}
            do
                echo $partition
                umount $partition
            done
        else
            echo "check host fail"
            exit -1
        fi
        disk_device_list=(`ls -l /dev/|grep -v ^l|awk '{print $NF}'|grep -E "^nvme[0-9]{1,2}n1$|^df[a-z]$|^os[a-z]$"`)
        full_disk_device_list=()
        for i in ${!disk_device_list[@]}
        do
            echo ${i}
            full_disk_device_list[${i}]=/dev/${disk_device_list[${i}]}
        done
        echo ${full_disk_device_list[@]}
        disk_part split ${full_disk_device_list[@]}
    }

    if [ ! -d "/polarx" ]; then
        umount /dev/vgpolarx/polarx
        vgremove -f vgpolarx
        dmsetup --force --retry --deferred remove vgpolarx-polarx
        format_nvme_mysql $1
    else
        echo "the lvm exists."
    fi

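LVM has not yet achieved multi-disk parallelism: performance is about the same as a single disk, and adding more disks does not improve read/write performance.

To see the parameters an LV was created with: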

    #lvs -o +lv_full_name,devices,stripe_size,stripes
      LV   VG  Attr       LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert LV       Devices           Stripe #Str
      drds vg1 -wi-ao---- 5.37t                                                   vg1/drds /dev/nvme0n1p1(0)      0    1
      drds vg1 -wi-ao---- 5.37t                                                   vg1/drds /dev/nvme2n1p1(0)      0    1

    # lvs -v --segments
      LV     VG       Attr       Start SSize  #Str Type    Stripe  Chunk
      polarx vgpolarx -wi-ao----     0 11.64t    4 striped 128.00k     0

    # lvdisplay -m
      --- Logical volume ---
      LV Path                /dev/vgpolarx/polarx
      LV Name                polarx
      VG Name                vgpolarx
      LV UUID                Wszlwf-SCjv-Txkw-9B1t-p82Z-C0Zl-oJopor
      LV Write Access        read/write
      LV Creation host, time ky4, 2022-08-18 15:53:29 +0800
      LV Status              available
      # open                 1
      LV Size                11.64 TiB
      Current LE             381544
      Segments               1
      Allocation             inherit
      Read ahead sectors     auto
      - currently set to     2048
      Block device           254:0

      --- Segments ---
      Logical extents 0 to 381543:
        Type                striped
        Stripes             4
        Stripe size         128.00 KiB
        Stripe 0:
          Physical volume   /dev/nvme1n1
          Physical extents  0 to 95385
        Stripe 1:
          Physical volume   /dev/nvme3n1
          Physical extents  0 to 95385
        Stripe 2:
          Physical volume   /dev/nvme2n1
          Physical extents  0 to 95385
        Stripe 3:
          Physical volume   /dev/nvme0n1
          Physical extents  0 to 95385

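==Pay special attention to stripes: it means several disks are used together so that their IOPS add up. The default number of stripes is 1, which means only one disk is used at a time, i.e. linear.==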
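A small filter can flag linear segments automatically. The sketch below assumes three whitespace-separated columns (LV name, VG name, stripe count), which is the shape `lvs --noheadings -o lv_name,vg_name,stripes` is expected to produce; the function name is made up for this example.

```shell
#!/usr/bin/env bash
# Reads "lv vg stripes" rows, e.g. from:
#   lvs --noheadings -o lv_name,vg_name,stripes
# and reports every segment that has a single stripe.
report_linear() {
  awk '$3 == 1 { print $2 "/" $1 ": stripes=1, linear" }'
}

# Sample rows shaped like that lvs output (no LVM needed to try this).
printf '  drds   vg1      1\n  polarx vgpolarx 4\n' | report_linear
# prints: vg1/drds: stripes=1, linear
```

On a real host the same filter would be fed from lvs run as root. An LV with several segments appears once per segment, as in the drds rows shown earlier.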

Installing LVM

    sudo yum install lvm2 -y

Inspecting LVM with dmsetup

dmsetup is one of the management tools for the device mapper in the kernel.

    dmsetup ls
    dmsetup info /dev/dm-0

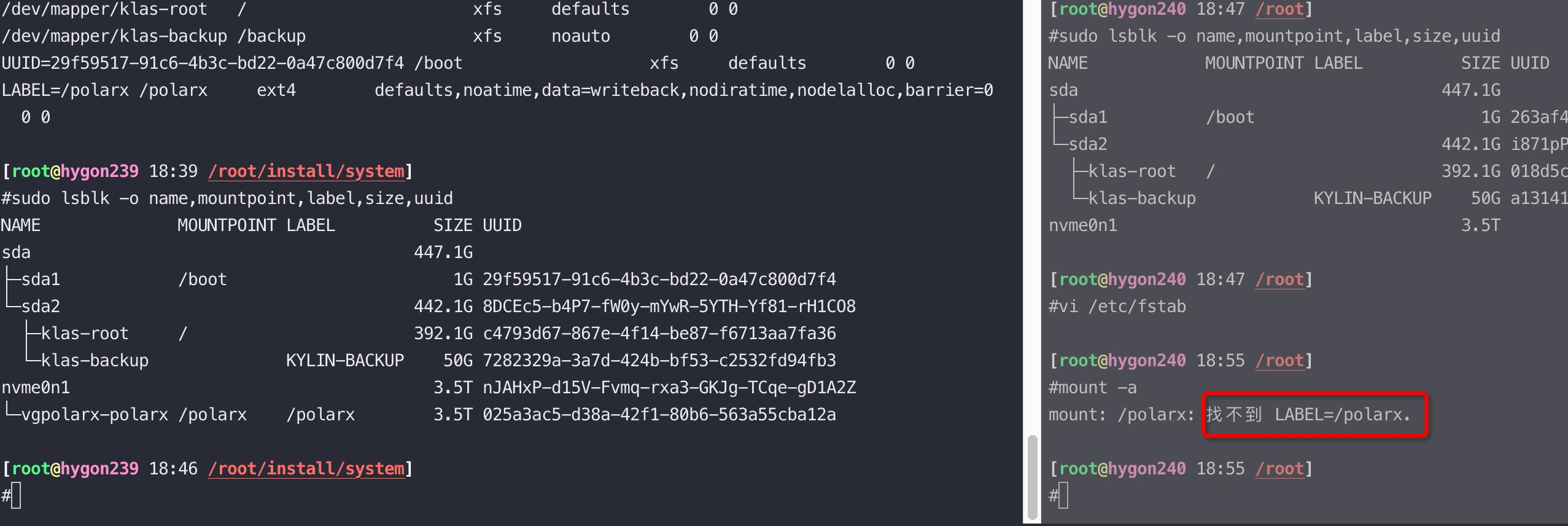

Reboot failure

On Kylin, a reboot may leave the OS unable to boot because of the error "mount: /polarx: can't find LABEL=/polarx." In that case, enter emergency mode, comment out the polarx line in /etc/fstab, and reboot again.

This happens because the LVM volume's label or UUID was lost, so the mount fails.

To check the device labels:

    sudo lsblk -o name,mountpoint,label,size,uuid
    # or
    lsblk -f

Fix:

In emergency mode, edit /etc/fstab to remove the problematic mount entry, then restore the label:

    #blkid    # show UUIDs and labels
    /dev/mapper/klas-root: UUID="c4793d67-867e-4f14-be87-f6713aa7fa36" BLOCK_SIZE="512" TYPE="xfs"
    /dev/sda2: UUID="8DCEc5-b4P7-fW0y-mYwR-5YTH-Yf81-rH1CO8" TYPE="LVM2_member" PARTUUID="4ffd9bfa-02"
    /dev/nvme0n1: UUID="nJAHxP-d15V-Fvmq-rxa3-GKJg-TCqe-gD1A2Z" TYPE="LVM2_member"
    /dev/sda1: UUID="29f59517-91c6-4b3c-bd22-0a47c800d7f4" BLOCK_SIZE="512" TYPE="xfs" PARTUUID="4ffd9bfa-01"
    /dev/mapper/vgpolarx-polarx: LABEL="polarx" UUID="025a3ac5-d38a-42f1-80b6-563a55cba12a" BLOCK_SIZE="4096" TYPE="ext4"

    e2label /dev/mapper/vgpolarx-polarx polarx
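A check like the following, run before rebooting, could catch a missing label early. It is a sketch: `fstab_labels` and `check_labels` are made-up helper names, and the second helper relies on `blkid -L <label>` returning a non-zero status when no device carries that label.

```shell
#!/usr/bin/env bash
# List "label mountpoint" for every LABEL= entry read from stdin (fstab format).
fstab_labels() {
  awk '$1 ~ /^LABEL=/ { sub(/^LABEL=/, "", $1); print $1, $2 }'
}

# Report fstab labels that no block device currently carries.
check_labels() {
  fstab_labels | while read -r label mnt; do
    blkid -L "$label" >/dev/null 2>&1 || echo "missing label: $label (mount point $mnt)"
  done
}

# Sample entry; on a real host use: check_labels < /etc/fstab
printf 'LABEL=polarx /polarx ext4 defaults 0 0\n' | fstab_labels
# prints: polarx /polarx
```

Running `check_labels < /etc/fstab` as root prints nothing when every label resolves to a device.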

For example, in the figure below, the right-hand side shows the failed boot.

mdadm (multiple devices admin) is a very useful tool for managing software RAID. It can create, manage, and monitor RAID devices. When creating an array with mdadm you can use whole disks (such as /dev/sdb, /dev/sdc) or specific partitions (/dev/sdb1, /dev/sdc1).

mdadm manual

mdadm --create device --level=Y --raid-devices=Z devices

Creating an array: -l 0 means RAID 0, -l 10 means RAID 10

    mdadm -C /dev/md0 -a yes -l 0 -n2 /dev/nvme{6,7}n1    # raid0
    mdadm -D /dev/md0
    mkfs.ext4 /dev/md0
    mkdir /md0
    mount /dev/md0 /md0

    # linear mode (devices are concatenated rather than striped)
    mdadm --create --verbose /dev/md0 --level=linear --raid-devices=2 /dev/sdb /dev/sdc

    # examine the member devices
    mdadm -E /dev/nvme[0-5]n1

Removing an array

    umount /md0
    mdadm -S /dev/md0

Monitoring RAID

    #cat /proc/mdstat
    Personalities : [raid0] [raid6] [raid5] [raid4]
    md6 : active raid6 nvme3n1[3] nvme2n1[2] nvme1n1[1] nvme0n1[0]
          7501211648 blocks super 1.2 level 6, 512k chunk, algorithm 2 [4/4] [UUUU]
          [=>...................] resync = 7.4% (280712064/3750605824) finish=388.4min speed=148887K/sec
          bitmap: 28/28 pages [112KB], 65536KB chunk    # raid6 keeps syncing data asynchronously

    md0 : active raid0 nvme7n1[3] nvme6n1[2] nvme4n1[0] nvme5n1[1]
          15002423296 blocks super 1.2 512k chunks
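For scripted monitoring, the resync percentage can be pulled out of /proc/mdstat with a short awk filter. This is a sketch that assumes the layout shown above: an `mdN :` header line followed by a progress line containing `resync` or `recovery`. The function name is made up for this example.

```shell
#!/usr/bin/env bash
# Print "<md device> <percent>" for every array that is resyncing or recovering.
mdstat_progress() {
  awk '
    /^md[0-9]+ :/ { dev = $1 }                      # remember the current array
    /resync|recovery/ && match($0, /[0-9.]+%/) {    # progress line with a percentage
      print dev, substr($0, RSTART, RLENGTH)
    }'
}

# Sample taken from the output above; on a real host: mdstat_progress < /proc/mdstat
mdstat_progress <<'EOF'
md6 : active raid6 nvme3n1[3] nvme2n1[2] nvme1n1[1] nvme0n1[0]
      7501211648 blocks super 1.2 level 6, 512k chunk, algorithm 2 [4/4] [UUUU]
      [=>...................]  resync =  7.4% (280712064/3750605824) finish=388.4min speed=148887K/sec
EOF
# prints: md6 7.4%
```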

Controlling the resync speed

    #sysctl -a |grep raid
    dev.raid.speed_limit_max = 0
    dev.raid.speed_limit_min = 0

nvme-cli

    nvme id-ns /dev/nvme1n1 -H
    for i in `seq 0 1 2`; do nvme format --lbaf=3 /dev/nvme${i}n1 ; done    # format with a different sector size (default 512, 4K available)
    fuser -km /data/

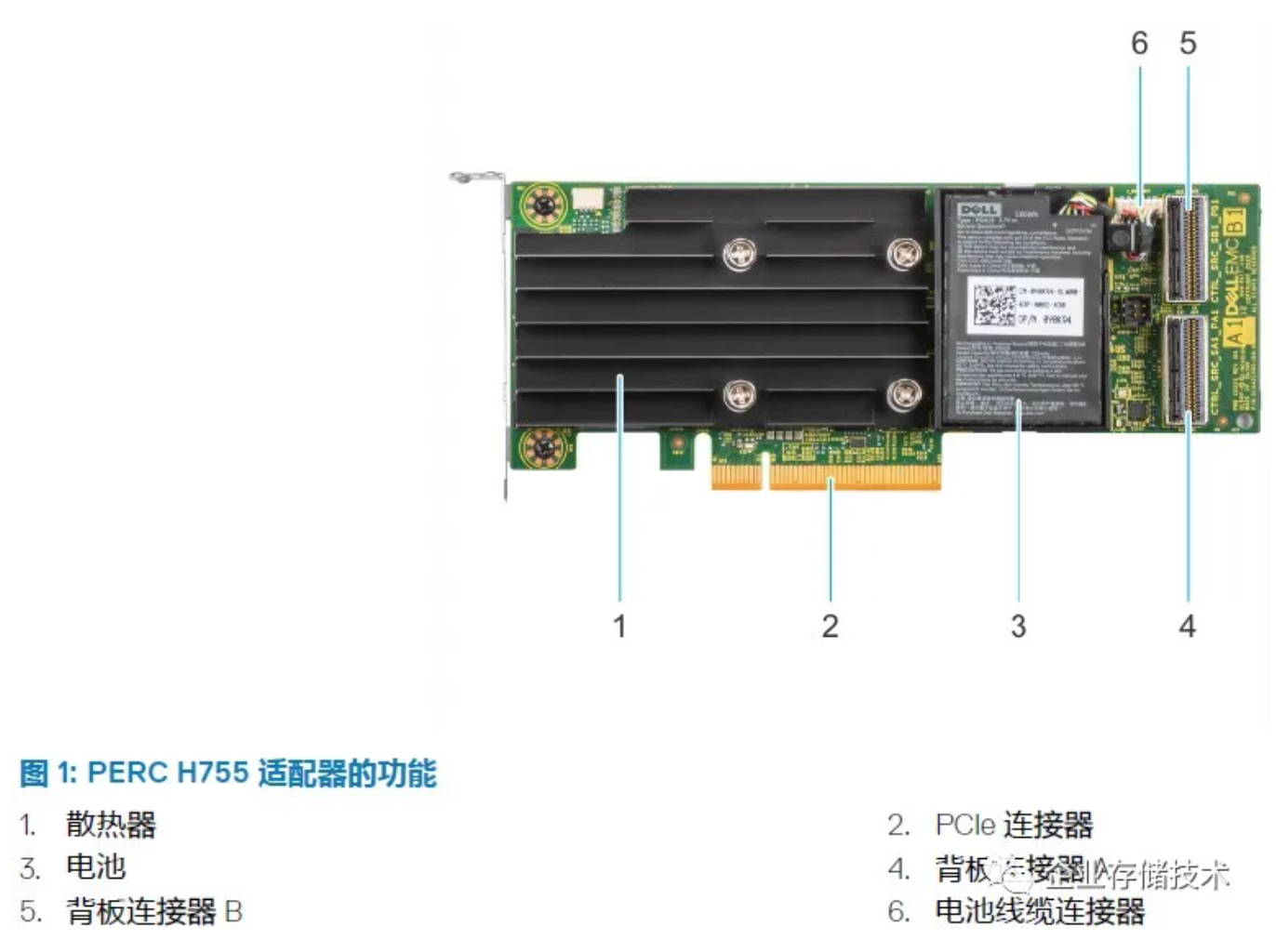

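Hardware RAID cards

What a RAID card looks like

Effect of mount options on performance

Recommended mount options: defaults,noatime,data=writeback,nodiratime,nodelalloc,barrier=0. These perform about the same as a plain "defaults 0 0" entry, but adding lazytime makes performance very poor in some scenarios.

For example, a MySQL filesort can trigger a find_inode_nowait hotspot. During a filesort, MySQL operates on a file in the order create, open, unlink, write, read, close. With the lazytime option, once the filesystem sees that an inode has been modified it also updates the other inodes in the same inode table, which turns the spin lock in find_inode_nowait into a hotspot.

So make sure lazytime is not among the mount options.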
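A quick way to audit a host is to scan the mount table for the option. The sketch below assumes /proc/mounts-style input, where the fourth field holds the comma-separated options; the function name is made up for this example.

```shell
#!/usr/bin/env bash
# Print the mount point of every filesystem mounted with lazytime.
# Input: /proc/mounts format (device, mount point, fstype, options, ...).
lazytime_mounts() {
  awk '{
    n = split($4, opts, ",")
    for (i = 1; i <= n; i++)
      if (opts[i] == "lazytime") { print $2; break }
  }'
}

# Sample lines; on a real host: lazytime_mounts < /proc/mounts
printf '%s\n' \
  '/dev/mapper/vgpolarx-polarx /polarx ext4 rw,noatime,lazytime,nodelalloc 0 0' \
  '/dev/sda3 / ext4 rw,relatime 0 0' | lazytime_mounts
# prints: /polarx
```

Matching whole options after splitting on commas avoids false hits on option names that merely contain the string.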

If a SQL statement creates a large number of temporary tables and /tmp/ is mounted with lazytime, the same problem occurs; see the stack in the figure:

The corresponding kernel code:

Another application also frequently saturates the CPU because of the find_inode_nowait hotspot:

The lazytime problem can be reproduced with code:

    #include <fcntl.h>
    #include <pthread.h>
    #include <stdio.h>
    #include <stdlib.h>
    #include <sys/stat.h>
    #include <sys/types.h>
    #include <unistd.h>

    #include <atomic>
    #include <string>

    typedef unsigned long long ulonglong;

    static const char *FILE_PREFIX = "stress";
    static const char *FILE_DIR = "/flash4/tmp/";
    static std::atomic<ulonglong> f_num(0);
    static constexpr size_t THREAD_NUM = 128;
    static constexpr ulonglong LOOP = 1000000000000;

    // Same pattern as a filesort temp file: create/open, unlink, write, close.
    void file_op(const char *file_name) {
      int f;
      char content[1024] = {0};
      content[0] = 'a';
      content[500] = 'b';
      content[1023] = 'c';

      // O_CREAT requires an explicit mode argument.
      f = open(file_name, O_RDWR | O_CREAT, 0644);
      if (f < 0) return;
      unlink(file_name);
      for (ulonglong i = 0; i < 1024 * 16; i++) {
        write(f, content, 1024);
      }
      close(f);
    }

    void *handle(void *data) {
      char file[1024];
      ulonglong current_id;

      for (ulonglong i = 0; i < LOOP; i++) {
        current_id = f_num++;
        snprintf(file, 1024, "%s%s_%llu.txt", FILE_DIR, FILE_PREFIX, current_id);
        file_op(file);
      }
      return NULL;
    }

    int main(int argc, char **args) {
      pthread_t tids[THREAD_NUM];
      for (std::size_t i = 0; i < THREAD_NUM; i++) {
        pthread_create(&tids[i], NULL, handle, NULL);
      }
      // Wait for the workers; otherwise main returns and the process exits at once.
      for (std::size_t i = 0; i < THREAD_NUM; i++) {
        pthread_join(tids[i], NULL);
      }
    }

Proactive; tools and productivity; oriented toward failures and incidents

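Repairing LVM failures

A damaged filesystem is a fairly common reason for a system failing to boot. The usual causes are a lost partition and a filesystem that needs manual repair.

1. Lost partition table: simply recreate it. The partition table only involves bytes at fixed positions in the first sector of the disk, so as long as you know the disk was partitioned, recreating the partitions after the table is lost does not damage the data on the disk. The start position and size of each partition must be correct, however. Once the start position is known, repartition with fdisk. The key question is therefore how to determine where the partition starts.

Determining the partition start: the MBR (Master Boot Record) is a 512-byte data structure in the first sector of the disk, and the partition data is part of it. Use dd if=/dev/vdc bs=512 count=1 | hexdump -C to dump the raw data of sector 0 in hexadecimal:

The dump shows the partition type ID, the partition's starting sector, and the number of sectors it contains; these values determine the partition's location. For LVM, the start position can then be computed from the LABELONE marker.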
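Reading those three values by eye from a hex dump is error-prone, so here is a sketch that decodes the first partition entry directly. It relies on the standard MBR layout: entry 1 is 16 bytes at offset 446, the type ID is byte 4 of the entry, the starting LBA is bytes 8 to 11, and the sector count is bytes 12 to 15, both little-endian. The function name and the synthetic test sector are made up for the example.

```shell
#!/usr/bin/env bash
# Decode partition entry 1 of an MBR (a disk, or a saved copy of sector 0).
mbr_first_partition() {
  local img=$1
  # Entry 1 is 16 bytes at offset 446; read it as unsigned decimal bytes.
  set -- $(od -An -v -tu1 -j446 -N16 "$img")
  local type=$5                                                            # byte 4: type ID
  local start=$(( $9 + (${10} << 8) + (${11} << 16) + (${12} << 24) ))     # bytes 8-11, little-endian
  local count=$(( ${13} + (${14} << 8) + (${15} << 16) + (${16} << 24) ))  # bytes 12-15, little-endian
  printf 'type=0x%02x start=%d sectors=%d\n' "$type" "$start" "$count"
}

# Synthetic 512-byte sector: type 0x8e (Linux LVM), start 2048, 4194304 sectors.
img=$(mktemp)
head -c 446 /dev/zero > "$img"
printf '\200\000\000\000\216\000\000\000\000\010\000\000\000\000\100\000' >> "$img"
head -c 50 /dev/zero >> "$img"
mbr_first_partition "$img"
# prints: type=0x8e start=2048 sectors=4194304
rm -f "$img"
```

On a real disk the function would be pointed at the device itself (as root), for example the /dev/vdc used in the dd command above.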

References

https://www.tecmint.com/manage-and-create-lvm-parition-using-vgcreate-lvcreate-and-lvextend/

pvcreate error : Can’t open /dev/sdx exclusively. Mounted filesystem?

For how to configure software RAID, see here